About

UTS Tech Festival (23 June – 04 July, 2025) is a two-week festival of events, showcases, hackathons, workshops, masterclasses, seminars, competitions, industry-student engagement and much more! Our goal is to bring together students, academics and industry to foster learning, inspire, share ideas and promote innovation.

Come along to the UTS AI Showcase, part of the UTS Tech Festival 2025, to discover Artificial Intelligence, Deep Learning, Reinforcement Learning, Computer Vision, NLP, and Vision-Language fusion projects!

This is the fifth iteration of the UTS AI Showcase, which presents Artificial Intelligence (AI) and Deep Learning projects developed by undergraduate, postgraduate, and higher degree research (HDR) students from the Faculty of Engineering and IT (FEIT).

Venue:

UTS Faculty of Engineering and Information Technology

Broadway, Ultimo, NSW 2007, Australia

Date and Time:

Tuesday, June 24, 2025, 3.30pm – 7.30pm

Showcase Winners

Best Project: Innovation/Research

Team: GuardianAI

Team Members:

- Shudarshan Kongkham

- Abdullah Bahri

- Hayrambh Monga

Best Project: Usecase/Application

Team: SecondSight

Team Members:

- Kamatchi G

- Rozhin Vosoughi

- Anna Huang

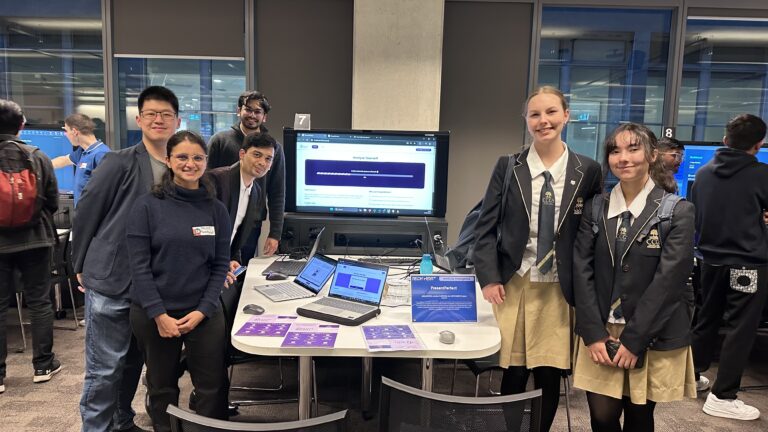

People's Choice Award:

Team: PresentPerfect

Team Members:

- Aditya Maniar

- Jonathan Elian Sjamsudin

- Kavi Varun Sathyamurthy

- Saloni Samant

ACS Industry Choice Award

Team: Solar Shield

Team Members:

- Kush Mukeshkumar Patel

- Deep Patel

- Shruti Agarwal

DroneShield Industry Choice Award

Team: TrustRoute

Team Members:

- Jovan Popovic

- Benedict Poon

- Alan Chen

- Quang Minh Nguyen

Photo Gallery

Showcase Program

Participating Teams

Computer Vision Projects

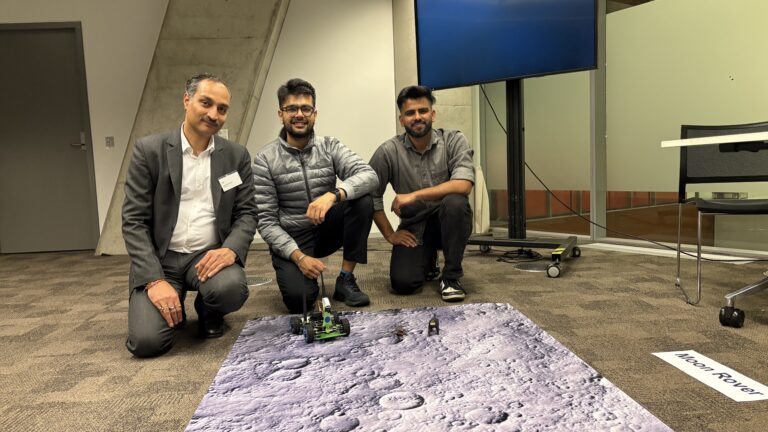

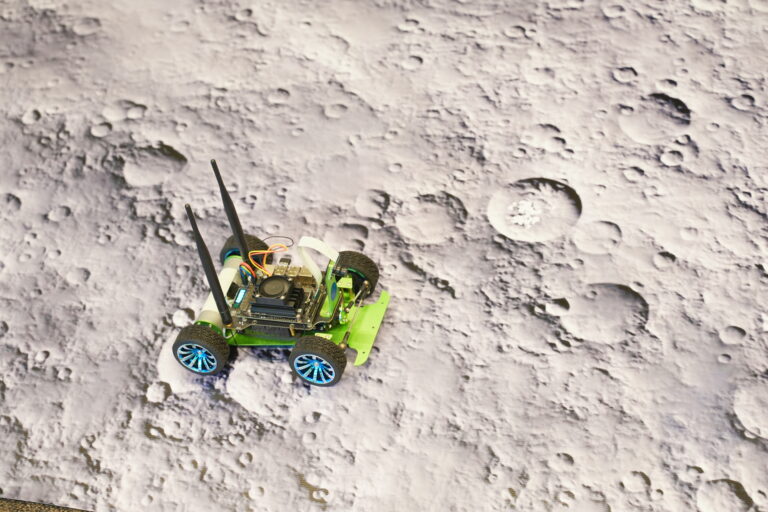

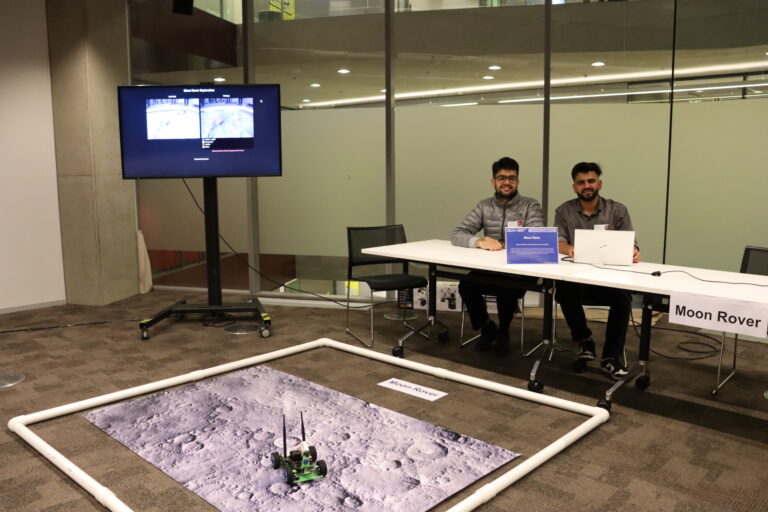

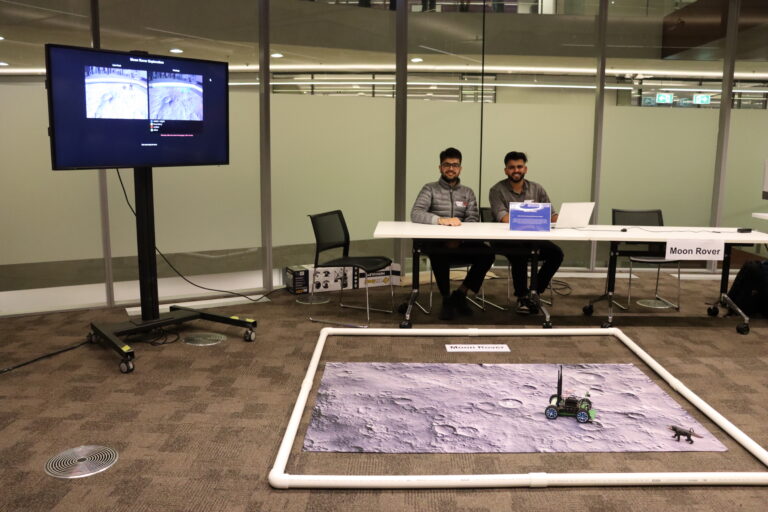

Moon Rover

This project focuses on building a real-time object detection and terrain classification system tailored for a simulated lunar exploration environment. Utilizing the JetRacer platform powered by NVIDIA Jetson Nano, the system integrates autonomous navigation with computer vision to support remote terrain analysis. A custom image dataset was developed through controlled indoor experiments designed to mimic extraterrestrial surface conditions.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.205

Pod : 1

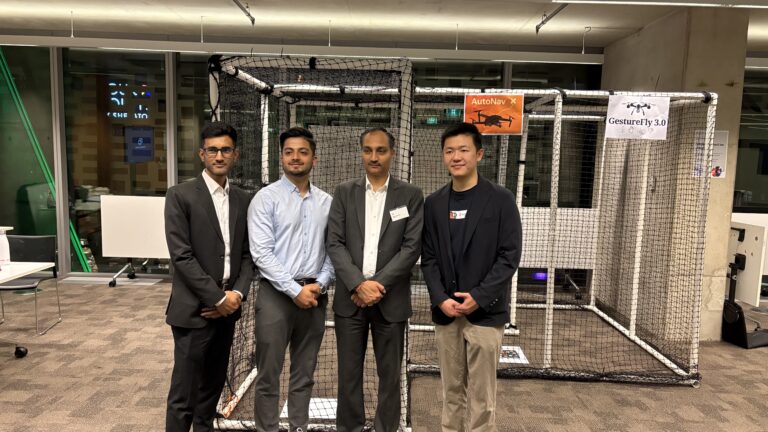

AutoNav

This project presents a lightweight autonomous drone system that uses real-time computer vision for navigation and landing in indoor environments. Using a DJI Tello drone, the system employs computer vision techniques to follow a floor path and identify a landing pad. The entire vision pipeline is integrated into a custom GUI with live video feeds and flight controls. The system navigates an abstract path, demonstrating robust indoor autonomy without GPS.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.205

Pod : 2

GestureFly3.0

This project enables real-time, gesture-based drone control using a laptop webcam. A YOLOv8 model detects hand gestures mapped to commands like takeoff, landing, turns, and directional movement. A custom GUI provides live video feedback, offering an intuitive, hands-free control system for dynamic human-drone interaction. The key advantage is its accessibility and flexibility—no specialized hardware is required, making it ideal for use in a range of scenarios.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.205

Pod : 1

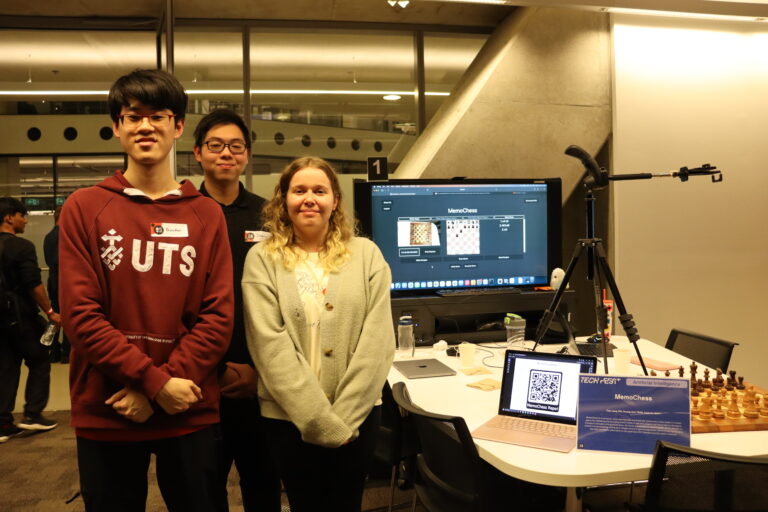

MemoChess

MemoChess is a computer vision solution that automatically tracks every move made in a chess game. By validating and recording chess moves in real time, players can focus fully on the game instead of manually writing moves down. All moves are stored in a downloadable file for players' or arbiter's record-keeping, acting as a reliable record of game progression. MemoChess serves as a low-cost, accessible alternative to the expensive DGT boards currently used in practice. It’s ideal for tournaments, coaching, and casual play.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 1

Drishti

The project enables visually impaired users to navigate indoor spaces safely by combining real-time object detection, segmentation, and depth estimation. It processes live video to identify obstacles, estimate distances, and provide voice-based navigation commands - all powered by deep learning and text-to-speech technology. The system is non-intrusive and cost-effective, requiring only a standard camera and headset. It enhances independence and safety, making indoor navigation more accessible.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 2

SignSync

SignSync revolutionizes virtual meetings by instantly translating Australian Sign Language (Auslan) fingerspelling into real-time captions. Utilizing advanced AI and seamless integration with video conferencing platforms, SignSync eliminates communication barriers, fostering inclusivity for sign language users. Future enhancements include support for numeric gestures and wider compatibility, significantly broadening accessibility in digital interactions. Currently integrated with Jitsi, SignSync offers an open-source, privacy-friendly solution for inclusive communication.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 3

VisionDrive

What if Autonomous Systems (AS) didn't require massive computational resources or specialised hardware? Our project aimed to demonstrate this by developing a Level 2 AS for driving, using a hybrid model combining CNNs and novel Liquid Time-Constant cells. Our system achieves real-time performance on consumer hardware while maintaining resilient driving capabilities in steering and speed. We also provide an accompanying simulation platform, giving researchers an intuitive interface for real-time evaluation. This framework opens the door to more accessible and cheaper AS across industries.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 5

SeatScout

SeatScout is a real-time seat occupancy detection system designed to reduce the time students spend searching for seats in university libraries and study spaces. It uses CCTV video feeds and a YOLOv12-based object detection model to identify chairs and people, determining seat availability. A live dashboard mirrors room layouts, helping users locate vacant seats instantly. SeatScout improves space utilization, reduces frustration, and enhances the student study experience.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 6
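As a rough illustration of how SeatScout's seat availability could be derived from detections, the sketch below marks a chair as occupied when a detected person box covers enough of the chair box. The `Box` type, the `seat_status` helper, and the 0.3 overlap threshold are all assumptions made for this example. The project's actual decision rule is not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixels (hypothetical detector output)."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def overlap_fraction(chair: Box, person: Box) -> float:
    """Fraction of the chair box that is covered by the person box."""
    w = min(chair.x2, person.x2) - max(chair.x1, person.x1)
    h = min(chair.y2, person.y2) - max(chair.y1, person.y1)
    if w <= 0 or h <= 0 or chair.area() == 0:
        return 0.0
    return (w * h) / chair.area()


def seat_status(chairs, people, threshold=0.3):
    """Return one bool per chair: True if any person covers >= threshold of it.

    The threshold is an illustrative assumption, not a value from the project.
    """
    return [
        any(overlap_fraction(c, p) >= threshold for p in people)
        for c in chairs
    ]


# Two chairs side by side, one person sitting on the first chair.
chairs = [Box(0, 0, 100, 100), Box(200, 0, 300, 100)]
people = [Box(20, -50, 90, 80)]
status = seat_status(chairs, people)
print(status)  # [True, False]
```

A dashboard could then colour each seat in the room layout from this list of booleans.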

PresentPerfect

PresentPerfect is a browser-based video-analysis tool that harnesses computer-vision techniques such as pose and gesture detection, facial-expression recognition, and gaze tracking to assess and coach public-speaking performance. Born from the common fear of public speaking, it helps students, jobseekers, and professionals practise with confidence and polish their delivery for interviews, presentations, or everyday conversations.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 7

Solar Shield

This project aims to develop an AI-powered drone-based inspection system designed for solar panel maintenance companies to streamline both small- and large-scale inspections. The system integrates autonomous drone technology with optical cameras and AI-driven computer vision to identify physical damage anomalies, including cracks, soiling (dust/debris), vegetation interference, shading, frame damage, and water stains or corrosion.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 8

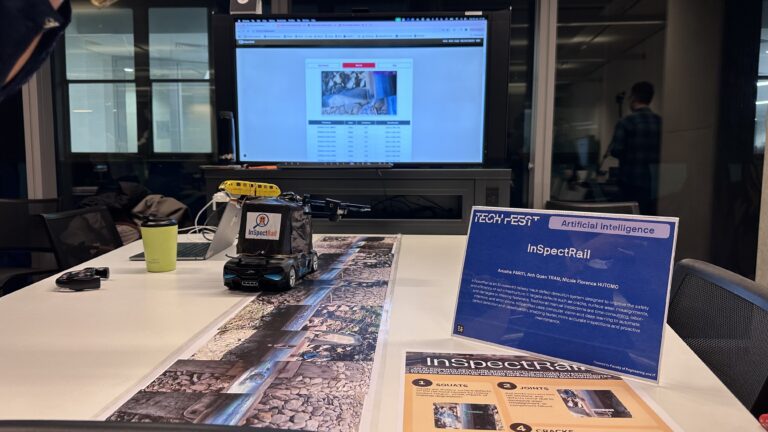

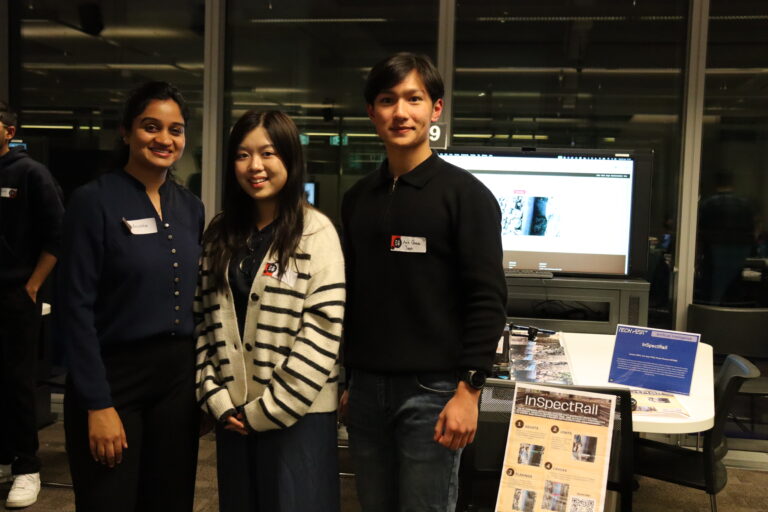

InSpectRail

InSpectRail is an AI-powered railway track defect detection system designed to improve the safety and efficiency of rail infrastructure. It targets defects such as cracks, surface wear, misalignments, and damaged or missing fasteners. Traditional manual inspections are time-consuming, labor-intensive, and error-prone. InSpectRail uses computer vision and deep learning to automate defect detection and classification, enabling faster, more accurate inspections and proactive maintenance.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 9

FallSafe

This project presents FallSafe, a deep learning–based fall detection system combining YOLOv11-Pose for keypoint extraction with an ensemble of models: ANN, LSTM, and a rule-based classifier. The system processes both video uploads and live camera streams, detecting falls in real time using keypoint features.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 10

FireMap

Bush fires are a major natural disaster and a particular threat locally. As the 2019-2020 bushfire season demonstrated, accurate information on the location and extent of active bush fires is vital both for firefighting agencies and for the public, who need to be aware and able to evacuate safely if needed. This project creates an image segmentation model that can quickly identify where the fire is from imagery. This allows agencies to avoid manual digitising, expediting the process of getting from raw captured data to actionable maps that can be shared with the public.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 11

WildScan

This project aims to develop an engaging mobile application for children visiting the zoo. The app encourages kids to explore the zoo by completing a fun challenge—capturing photos of 10 different animals. Using real-time image recognition, the app identifies each animal through the phone’s camera and presents interesting facts to the user. As children discover all animals, they earn a digital Wildlife Explorer Certificate, making the visit both educational and rewarding. The app enhances learning, boosts family engagement, and improves overall visitor satisfaction.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 12

VisionMouse

This project aims to develop a gesture recognition system that enables users to control computer actions using facial gestures detected via webcam. The motivation is to enhance accessibility and provide alternative input methods for users with limited mobility or for hands-free operation. The application can be used for controlling mouse actions, keyboard toggling, and other system functions through simple facial gestures. The problem addressed is the lack of intuitive, hands-free computer control for users who may not be able to use traditional input devices due to physical limitations.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 13

TrafficFootprint

This project integrates computer vision and meteorological data to investigate the relationship between urban traffic and environmental pollution. We trained a custom YOLOv5-based model on real Sydney traffic scenarios to enable real-time vehicle detection and classification. Using meteorological data, the system can conduct a detailed analysis of vehicle emissions and their impact on air quality. The application employs a dual-mode fusion strategy and DeepSORT multi-target tracking to ensure accurate identification and lane-level counting.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 14
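To make the idea of lane-level counting in TrafficFootprint concrete, here is a minimal sketch that counts tracked vehicles as they cross a virtual line, bucketed by lane. The track format, the `count_crossings` helper, and the lane boundaries are hypothetical. Any tracker that yields per-vehicle centroid paths could feed it, and the project's own counting logic may differ.

```python
from collections import Counter


def count_crossings(tracks, line_y, lane_edges):
    """Count tracks whose centroid crosses the line y = line_y, per lane.

    tracks: {track_id: [(x, y), ...]} centroids in frame order.
    lane_edges: ascending x positions separating lanes, e.g. [0, 100, 200].
    """
    counts = Counter()
    for path in tracks.values():
        for (x0, y0), (x1, y1) in zip(path, path[1:]):
            if y0 < line_y <= y1:  # moved downwards across the line
                lanes = zip(lane_edges, lane_edges[1:])
                for lane, (left, right) in enumerate(lanes):
                    if left <= x1 < right:
                        counts[lane] += 1
                break  # count each track at most once
    return counts


# Made-up centroid paths: tracks 1 and 3 cross y = 50, track 2 does not.
tracks = {
    1: [(50, 10), (52, 40), (55, 70)],
    2: [(150, 20), (150, 45)],
    3: [(160, 30), (158, 60)],
}
counts = count_crossings(tracks, line_y=50, lane_edges=[0, 100, 200])
print(dict(counts))  # {0: 1, 1: 1}
```

Counting on the crossing event rather than on per-frame detections avoids counting the same vehicle several times while it stays in view.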

Lunge & Learn

Lunge & Learn is an AI fencing coach that identifies which footwork movement is being attempted and then provides improvements and summaries based on that attempt. It can identify the four most prominent motions within fencing by identifying where each joint in the body is. After classification, it provides visual and descriptive feedback on how that pose can be improved. Lunge & Learn aims to lower the barrier for learning to fence by providing accurate, easy and low-cost coaching.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.400

Pod : 15
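Pose-based coaching of the kind Lunge & Learn describes typically starts from joint positions. The sketch below computes the angle at a joint from three 2D keypoints and turns it into a simple text hint. The keypoint values, the 90-degree target, and the tolerance are assumptions for illustration only, not the project's actual feedback rules.

```python
import math


def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct")
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def knee_feedback(angle, target=90.0, tolerance=15.0):
    """Return a short hint; target and tolerance are hypothetical values."""
    if abs(angle - target) <= tolerance:
        return "Front knee angle looks good."
    if angle > target:
        return "Bend the front knee more."
    return "Front knee is bent too far."


# Made-up keypoints (x, y) for hip, knee and ankle.
hip, knee, ankle = (0.0, 0.0), (1.0, 0.0), (1.0, 1.0)
angle = joint_angle(hip, knee, ankle)
print(round(angle))  # 90
print(knee_feedback(angle))  # Front knee angle looks good.
```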

GuardianAI

Smart elderly safety monitoring system using real-time AI pose detection. Tracks multiple people simultaneously with color-coded IDs, instantly detecting falls and sending customizable email/sound alerts per person. Features intuitive web interface, live stats dashboard, alert history, and works with webcams or IP cameras. Built with privacy-first design for homes and care facilities. Key tech: YOLO11-Pose detection + BiLSTM neural networks. Easy setup via Streamlit GUI - no technical expertise required.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.300

Pod : 1

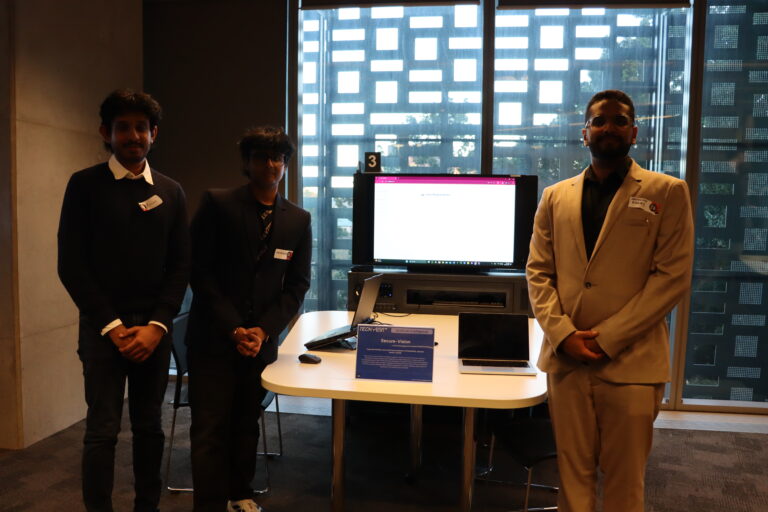

Secure-Vision

Secure Vision is an AI-driven surveillance system combining YOLOv12 for human detection, ByteTrack for object tracking, and InsightFace for facial recognition. It delivers real-time monitoring through a user-friendly Streamlit dashboard. Trained on diverse, augmented datasets, the system ensures high accuracy in complex environments. Secure Vision enhances traditional CCTV by providing intelligent alerts, identity management, and fast response, making security operations more efficient and automated.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.300

Pod : 3

StainSnap

Every year, the global fashion sector emits 1.2 billion tons of greenhouse gases, and textile waste is a major contributor to environmental harm. As the world's apparel consumption is expected to increase by 63% over the next five years, sustainable solutions are becoming increasingly essential. Many garments are discarded due to stains that could be removed with proper treatment, highlighting a gap in consumer knowledge. StainSnap is an AI-powered mobile application that detects stains on clothing, providing tailored stain removal guidance to help consumers extend the lifespan of their garments.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.300

Pod : 4

SecondSight

SecondSight is a live hazard detection application that leverages artificial intelligence and wearable devices. The primary goal is to prevent accidents and enhance situational awareness unobtrusively for the vision impaired, improving safety and quality of life. It is designed for navigating streets and parks, where small objects often lead to accidents and go undetected by a cane. SecondSight detects these ground-level hazards in real time using custom-trained AI models, and delivers haptic feedback and speech alerts tailored for blind users so they can make informed decisions and prevent incidents.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.300

Pod : 5

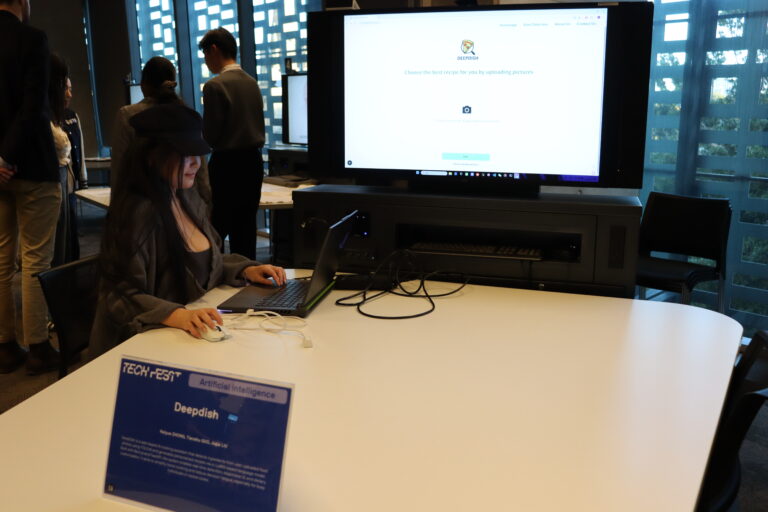

DeepDish

DeepDish is a web-based AI cooking assistant that detects ingredients from user-uploaded food photos using YOLOv8 and generates personalized recipes via a LLaMA-based language model. Built with Next.js and FastAPI, the system enables real-time detection, responsive UI, and dietary customization. It aims to simplify home cooking and reduce decision fatigue, especially for busy individuals or novice cooks.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.301

Pod : 1

Smart SAR

This project details a computer vision-aided search & rescue system. It uses YOLOv12n to identify 120 dog breeds and a ResNet-based model for human facial detection. This lays the groundwork for a scalable SAR system, with future work planned for faster facial recognition and smartphone deployment.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.301

Pod : 2

DriveGuard

The goal for the team is to develop a machine learning model that uses object detection to detect antisocial driving behaviors. These behaviors range from illegal U-turns and tailgating to erratic driving. Prior to collecting the data and building the models, the team needed to fully grasp the key elements of the problem by clearly defining variables, constraints, and assumptions.

Subject: 42028 Deep Learning and CNN

Room : CB11.04.203

Pod : 1

Natural Language Processing Projects

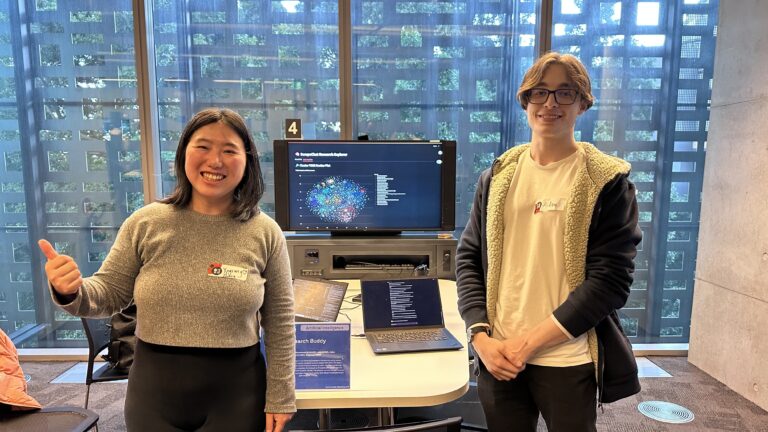

Research Buddy

Our project addresses the challenge of overwhelming academic literature by using natural language processing to organise and explore computer science papers from arXiv. We apply K-means clustering and sentence embeddings to group papers by topic, then use transformer models for summarisation and topic extraction. An interactive 2D scatter plot allows users to search, view abstracts, and explore related papers visually. This solution transforms unstructured data into a searchable, intuitive tool that helps researchers quickly find relevant publications and gain insights.

Subject: 42850 NLP Algorithms

Room : CB11.04.301

Pod : 4
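The clustering step Research Buddy describes, grouping paper embeddings with K-means, can be sketched in plain Python as follows. The 2D vectors stand in for real sentence embeddings, which have hundreds of dimensions. The `kmeans` helper and the choice of initial centroids are assumptions for this example, not the team's implementation.

```python
def squared_distance(p, q):
    """Squared Euclidean distance between two equal-length vectors."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def kmeans(points, centroids, iterations=10):
    """Plain K-means (Lloyd's algorithm) on small lists of float vectors."""
    centroids = [list(c) for c in centroids]
    labels = [0] * len(points)
    for _ in range(iterations):
        # Assignment step: give each point the index of its nearest centroid.
        labels = [
            min(range(len(centroids)),
                key=lambda k: squared_distance(p, centroids[k]))
            for p in points
        ]
        # Update step: move each centroid to the mean of its members.
        for k in range(len(centroids)):
            members = [p for p, lab in zip(points, labels) if lab == k]
            if members:  # keep the old centroid if a cluster is empty
                centroids[k] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids


# Toy 2D "embeddings" (made up): two loose groups of three papers each.
embeddings = [
    (0.9, 0.1), (1.0, 0.2), (0.8, 0.0),
    (0.1, 0.9), (0.0, 1.0), (0.2, 0.8),
]
labels, centroids = kmeans(embeddings, centroids=[embeddings[0], embeddings[3]])
print(labels)  # [0, 0, 0, 1, 1, 1]
```

In practice the cluster labels would drive the colours of the 2D scatter plot, after the embeddings are projected down to two dimensions.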

InsightFlow

The integration of Natural Language Processing (NLP) in qualitative research enhances both efficiency and depth of data analysis. Our project aims to develop an all-in-one solution that will automate and streamline key tasks such as theme identification, sentiment analysis, quote extraction, and interview summarisation. It can also handle large datasets, offer flexibility in qualitative analysis methods, and enable output export for offline editing. The tool will not only reduce the manual workload significantly, but also enhance both the accuracy and depth of the analysis.

Subject: 36118 Applied NLP

Room : CB11.04.301

Pod : 5

TrustCheck

We adapt the FRIES Trust Score algorithm to evaluate individual GenAI prompt-response pairs rather than entire models. Our revised method uses survey-informed weights and conditional logic to better reflect human trust perceptions. It achieves 97.23% alignment with user ratings, improving on the original’s 94.77%. The algorithm is implemented in a browser extension, enabling real-time, transparent trust evaluation of GenAI outputs.

Subject: 41079 Computing Science Studio-2

Room : CB11.04.301

Pod : 6

MailClassifier

This project uses natural language processing to automatically classify job application emails. We developed two transformer-based models—a fine-tuned BART model and a custom GPT-style model—to analyze and categorize email responses. Trained on a balanced synthetic dataset, both models achieved high accuracy, showing promise in reducing the emotional and cognitive load on job seekers. We are actively continuing development to improve real-world accuracy and plan to enhance the system with more diverse, realistic data for future deployment in job platforms or email clients.

Subject: 42850 NLP Algorithms

Room : CB11.04.301

Pod : 7

TrustRoute

Cloud-based LLMs boost automation but risk privacy when data leaves the enterprise. We propose an adaptive routing system that uses a DistilBERT-based sensitivity classifier (95% recall, 5–300 ms) to mask PII: sensitive queries go to cloud LLMs (OpenAI, Claude) and non-sensitive ones run locally on LLaMA 3.1. Compliant with Australian Privacy Principles via user consent, HTTPS, session-only storage, audit logs, and opt-in filters. Experiments confirm feasibility and efficiency.

Subject: 41004 AI/Analytics Capstone Project

Room : CB11.04.301

Pod : 8

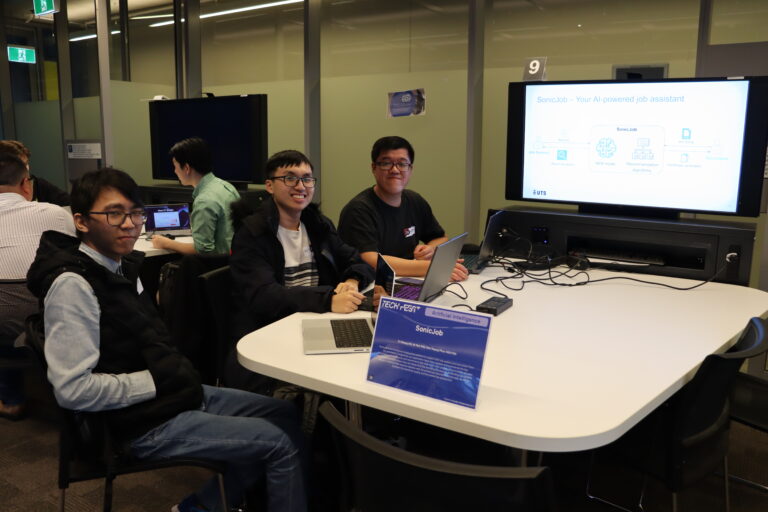

SonicJob

SonicJob is an AI-powered employment platform that supports both job seekers and recruiters. For candidates, our application analyses and summarises the key information in their resume and recommends jobs that match their profiles. For recruiters, we want to provide a platform that streamlines the recruiting process by shortlisting candidates based on whether their resume matches the posted job description. Ultimately, we aim to increase workforce quality and overall job satisfaction.

Subject: 42174 Artificial Intelligence Studio

Room : CB11.04.301

Pod : 9

GHG Monitor

This project develops an NLP-based digital consultant using Retrieval-Augmented Generation (RAG) to help companies navigate Australia’s evolving GHG regulations. The system provides tailored guidance on compliance, emission calculations, and scope definitions, enabling businesses to accurately assess and report emissions. A web-based application integrates reliable data and NLP methods to enhance accessibility and decision-making.

Subject: 36118 Applied NLP

Room : CB11.04.301

Pod : 10

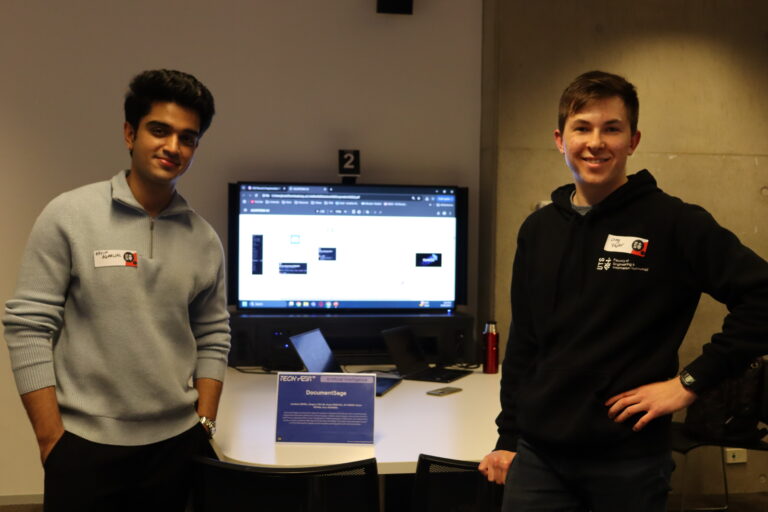

Document Sage

An LLM-assisted FAQ automation system was developed for small to medium enterprises, enabling dynamic chatbot interactions from static support documents. It supports internal and external document querying via PDF uploads, OCR, and a RAG-based backend. Integrated into Discord, it provides real-time, cost-effective support and seamless community engagement.

Subject: 41004 AI/Analytics Capstone Project

Room : CB11.04.203

Pod : 2

WellnessChat

The project focuses on the development of an AI-driven chatbot capable of engaging in emotionally supportive and contextually relevant conversations related to mental health. The chatbot is built on a pre-trained large language model, which is fine-tuned using the Unsloth framework on a mental health dialogue dataset. The objective is to enhance the model’s ability to respond with empathy, maintain coherence, and exhibit emotional sensitivity.

Subject: 36118 Applied NLP

Room : CB11.04.203

Pod : 3

Vision-Language Fusion Projects

VisionAid-VQA

Visual Question Answering (VQA) is a complex multimodal task that requires the integration of visual perception and natural language understanding to generate relevant answers. This work focuses on enhancing accessibility for visually impaired users by adapting VQA technology to their specific needs. We propose VisionAid-VQA, a user-centric system built by fine-tuning two state-of-the-art vision-language models—Vision and Language Transformer (ViLT) and Florence-2—on a representative subset of the VizWiz dataset, which contains real-world visual questions submitted by blind users.

Subject: 32933 Research Project

Room : CB11.04.301

Pod : 3

PromptVision AI

PromptVision is an agent-based framework that uses a large language model (LLM) to coordinate multiple specialized computer vision tools. Instead of relying on a single model, it breaks down complex visual tasks into sub-tasks like detection, segmentation, inpainting, and OCR. The agent interprets user instructions, plans multi-step actions, and dynamically sequences tools. This modular approach enables solutions to advanced tasks that individual tools can’t handle alone.

Subject: 32040 Industry Project

Room : CB11.04.300

Pod : 2

Meet Our Judges

Roman Koerner

DroneShield, SFAI Team Lead

Arun Kannan

ACS, ACS NSW Board Member

Dr. Ramya Rajendran

Data Specialist, Allianz

Dr. Muhammad Saqib

CSIRO/Data61

A/Prof. Hai Yan Lu

University of Technology Sydney

Meet Our Industry Speakers

Gitarth Vaishnav

Gitarth Vaishnav is an award-winning AI engineer and University Medal recipient from UTS, currently working at DroneShield, a global leader in defence technology and AI-powered systems. With a focus on mission-critical innovation and scalable autonomy, Gitarth has been recognised nationally for his contributions to AI in defence, public safety, and humanitarian technology. He is a passionate advocate for ethical AI and continues to mentor emerging technologists through UTS, industry collaborations, and public engagement.

Cindy Chung

Cindy Chung is a board member at the Australian Computer Society, the professional association for technology workers, where she is engaged in governance in various capacities. She is an advocate for the application of technology and innovation. Her team placed first in a 2023 competition at the Commonwealth Bank of Australia for applying AI for the benefit of the community. In recent years, she has been invited to speak at the Risk Discussion Group at CPA Australia, where she joins other guests on a broad range of topics that intersect risk, ethics, AI, cyber, and the tech economy.

Organizing Committee

A/Prof. Nabin Sharma

Organizing Chair and Lead

Jason Do

Coordinator, Engaged Learning

Sarah Rodriguez

Student Engagement Officer

Diego Ramirez Vargas

Casual Academic

Shudarshan Kongkham

Casual Academic

Sohitha Muthyala

Photography Assistant

Bhargav Ram Anantla

Photography Assistant

Supported By